Meeting the Challenges of the C-Level Executive to Board Director Transition

02/Jul/21 17:16

Life is full of transitions. Some are easy. But most are hard, for one reason or another. The good news of that for many transitions, plenty of guidance is available about how to succeed in making it. But it some cases, helpful, practical guidance is still lacking.

From our board consulting and personal experiences, Neil and I are painfully familiar with one of these: The challenge of transitioning from being a C-Level executive to being a board director. In this post, we’ll briefly summarize some of the key lessons we’ve learned.

A famous saying pithily claims that “management is about telling the answers, and governance is about asking the questions.” That’s definitely something to keep in mind, as well as the fact that many new directors (as well as some long serving ones) struggle to make that transition. But there’s more to it than that.

Broadly speaking, various courts have recognized that directors have two or three fundamental fiduciary duties.

The Duty of Loyalty says that directors must at all times act in the best interests of the company, and avoid personal economic conflict.

The Duty of Good Faith is a doctrine emerging out of various court decisions that requires fiduciaries to have subjectively honest and honorable intentions in all professional actions. Whether that is a separate, standalone duty or one that is subsumed under the duty of loyalty is still a matter of debate.

The Duty of Care refers to the principle that in making decisions, directors must act in the same manner as a reasonably prudent person in their position would.

In practice, the Duty of Care can be further disaggregated into seven key activities that boards perform:

(1) Establish the critical goals the organization must achieve to survive and thrive;

(2) Approve a strategy to reach those goals;

(3) Ensure that the allocation of financial and human resources aligns with that strategy;

(4) Hire a CEO to implement that strategy, and regularly evaluate their performance;

(5) Regularly review critical risks to the success of that strategy and the survival of the organization, and ensure they are being adequately managed;

(6) Ensure the timely and accurate reporting of results to stakeholders;

(7) In line with the “prudent man" rule, ensure that directors have received sufficient information and given it due consideration before making any decision.

In terms of content, C-Level executives transitioning to board directorship are already quite familiar with most of these. As noted above, the main challenge in these cases is developing a constructive Socratic approach -- learning to ask the questions rather than tell the answers.

However, in our experience there are two areas that can be a source of confusion and consternation.

Most C-Level executives tend to think of risk in operational, financial, regulatory, and reputational terms – basically, the standard contents of their previous company’s Enterprise Risk Management program. Typically such programs are organized around specific risks, whose probability of occurrence (over a usually undefined time horizon) and potential impact (sometimes netted against the assumed impact of mitigation measures, and sometimes not) are graphically summarized in the familiar two by two “heat map”. In the case of these risks, the board’s focus should be limited to the adequacy of the Enterprise Risk Management program.

The main focus of the board’s attention should be on strategic risks to the survival of the company and the success of its strategy.

There are good reasons for careful board focus in this area, including management compensation incentives that are strongly tied to upside value creation, not avoiding failure; a natural management focus on short term issues in an intensely competitive environment with constant pressure from investors to deliver high returns; and r recognition by many senior managers that their average job-tenure is growing ever-shorter.

This is not a criticism of management teams, who are rationally reacting to the situations and incentives they face. Rather, it highlights why strategic risk governance is a critical, if often underappreciated, board role.

Technically, these are usually not risks at all, in the sense of situations in which the full range of possible outcomes, and their associated probabilities and potential impacts are well understood (and thus can be priced and transferred to other organizations more willing to bear, for a fee, at least part of the company’s exposure to them). Instead, they are true uncertainties.

Governing strategic risk consists of three critical activities: anticipating future threats, assessing emerging threats (including their potential impact, and, critically, the speed at which they are developing), and ensuring that the organization is adapting to them in time.

The latter is similar to the familiar math problem involving the crossing point of two trains that are both accelerating. The first train is the emerging threat; the second is the process of developing and implementing adaptations before threat passes a critical threshold.

The second, potential source of frustration revolves around whether directors have received sufficient information and given it due consideration before making any decision.

C-Level executives know that sometimes decisions must be made under time pressure, in the face of uncertainty, with less than perfect information. Unlike management decisions, however, board decisions are subject to shareholder litigation (and sometimes regulatory review). It is for this reason that boards often retain independent outside advisors, such as investment banks in the case of mergers, acquisitions, and buyouts.

C-Level executives transitioning to directorship almost always have a strong track record of making good judgments in the face of uncertainty. For this reason, the need to think about information adequacy, alternative interpretations of the information at hand, questioning and validating assumptions, and ensuring that the board follows a clear decision process can easily lead to frustration.

Besides content challenges, new board directors may also face a number of process pitfalls that are deeply rooted in our evolutionary past.

At the individual level, we are naturally both overoptimistic and overconfident. Evolution has also predisposed us to choose people with these characteristics as group leaders.

We also unconsciously strive to maintain a coherent view of the world, and consequently pay less attention to, and underweight, bad news and information that does not fit well within our existing mental model of a system or situation. Most people seek information that confirms their beliefs, rather than information that calls them into question. This is accentuated when directors believe things are going well.

At the group level, when the fear center of our brain (the amygdala) is triggered by rising uncertainty or actual loss, our aversion to social isolation spikes, making us much more likely to conform to the views of a group and to resist voicing our concerns and/or sharing private information that conflicts with the dominant group view.

On boards, this tendency is further reinforced when directors come from similar social and educational backgrounds; a significant number of directors have experience in the organization’s sector, which can cause other directors to give excessive deference to their views, even when they are blind to the emergence of non-traditional threats; and/or the CEO has been in her/his role for a long time and harmonious relations exist between the board and the management team.

Last but certainly not least, the relationship between a Non-Executive Chairman (and the board more generally) and the CEO is, as we write in our research paper on this subject (download it here) a critical organizational bearing point on which long-term organizational survival and success rests.

This is another situation in which things that were quite obvious when one was a C-Level executive often cease to be so when one becomes a director.

In an era of unprecedented complexity and uncertainty, in which digitization and network effects are causing more and more industries to display “winner take all” dynamics, effective board governance has never been more important.

People with previous C-Level executive experience are well positioned to provide it. However, before they can make their critical contributions, there are challenges they must first overcome in the “not as easy as it looks” transition process to becoming an effective board director.

From our board consulting and personal experiences, Neil and I are painfully familiar with one of these: The challenge of transitioning from being a C-Level executive to being a board director. In this post, we’ll briefly summarize some of the key lessons we’ve learned.

A famous saying pithily claims that “management is about telling the answers, and governance is about asking the questions.” That’s definitely something to keep in mind, as well as the fact that many new directors (as well as some long serving ones) struggle to make that transition. But there’s more to it than that.

Broadly speaking, various courts have recognized that directors have two or three fundamental fiduciary duties.

The Duty of Loyalty says that directors must at all times act in the best interests of the company, and avoid personal economic conflict.

The Duty of Good Faith is a doctrine emerging out of various court decisions that requires fiduciaries to have subjectively honest and honorable intentions in all professional actions. Whether that is a separate, standalone duty or one that is subsumed under the duty of loyalty is still a matter of debate.

The Duty of Care refers to the principle that in making decisions, directors must act in the same manner as a reasonably prudent person in their position would.

In practice, the Duty of Care can be further disaggregated into seven key activities that boards perform:

(1) Establish the critical goals the organization must achieve to survive and thrive;

(2) Approve a strategy to reach those goals;

(3) Ensure that the allocation of financial and human resources aligns with that strategy;

(4) Hire a CEO to implement that strategy, and regularly evaluate their performance;

(5) Regularly review critical risks to the success of that strategy and the survival of the organization, and ensure they are being adequately managed;

(6) Ensure the timely and accurate reporting of results to stakeholders;

(7) In line with the “prudent man" rule, ensure that directors have received sufficient information and given it due consideration before making any decision.

In terms of content, C-Level executives transitioning to board directorship are already quite familiar with most of these. As noted above, the main challenge in these cases is developing a constructive Socratic approach -- learning to ask the questions rather than tell the answers.

However, in our experience there are two areas that can be a source of confusion and consternation.

Most C-Level executives tend to think of risk in operational, financial, regulatory, and reputational terms – basically, the standard contents of their previous company’s Enterprise Risk Management program. Typically such programs are organized around specific risks, whose probability of occurrence (over a usually undefined time horizon) and potential impact (sometimes netted against the assumed impact of mitigation measures, and sometimes not) are graphically summarized in the familiar two by two “heat map”. In the case of these risks, the board’s focus should be limited to the adequacy of the Enterprise Risk Management program.

The main focus of the board’s attention should be on strategic risks to the survival of the company and the success of its strategy.

There are good reasons for careful board focus in this area, including management compensation incentives that are strongly tied to upside value creation, not avoiding failure; a natural management focus on short term issues in an intensely competitive environment with constant pressure from investors to deliver high returns; and r recognition by many senior managers that their average job-tenure is growing ever-shorter.

This is not a criticism of management teams, who are rationally reacting to the situations and incentives they face. Rather, it highlights why strategic risk governance is a critical, if often underappreciated, board role.

Technically, these are usually not risks at all, in the sense of situations in which the full range of possible outcomes, and their associated probabilities and potential impacts are well understood (and thus can be priced and transferred to other organizations more willing to bear, for a fee, at least part of the company’s exposure to them). Instead, they are true uncertainties.

Governing strategic risk consists of three critical activities: anticipating future threats, assessing emerging threats (including their potential impact, and, critically, the speed at which they are developing), and ensuring that the organization is adapting to them in time.

The latter is similar to the familiar math problem involving the crossing point of two trains that are both accelerating. The first train is the emerging threat; the second is the process of developing and implementing adaptations before threat passes a critical threshold.

The second, potential source of frustration revolves around whether directors have received sufficient information and given it due consideration before making any decision.

C-Level executives know that sometimes decisions must be made under time pressure, in the face of uncertainty, with less than perfect information. Unlike management decisions, however, board decisions are subject to shareholder litigation (and sometimes regulatory review). It is for this reason that boards often retain independent outside advisors, such as investment banks in the case of mergers, acquisitions, and buyouts.

C-Level executives transitioning to directorship almost always have a strong track record of making good judgments in the face of uncertainty. For this reason, the need to think about information adequacy, alternative interpretations of the information at hand, questioning and validating assumptions, and ensuring that the board follows a clear decision process can easily lead to frustration.

Besides content challenges, new board directors may also face a number of process pitfalls that are deeply rooted in our evolutionary past.

At the individual level, we are naturally both overoptimistic and overconfident. Evolution has also predisposed us to choose people with these characteristics as group leaders.

We also unconsciously strive to maintain a coherent view of the world, and consequently pay less attention to, and underweight, bad news and information that does not fit well within our existing mental model of a system or situation. Most people seek information that confirms their beliefs, rather than information that calls them into question. This is accentuated when directors believe things are going well.

At the group level, when the fear center of our brain (the amygdala) is triggered by rising uncertainty or actual loss, our aversion to social isolation spikes, making us much more likely to conform to the views of a group and to resist voicing our concerns and/or sharing private information that conflicts with the dominant group view.

On boards, this tendency is further reinforced when directors come from similar social and educational backgrounds; a significant number of directors have experience in the organization’s sector, which can cause other directors to give excessive deference to their views, even when they are blind to the emergence of non-traditional threats; and/or the CEO has been in her/his role for a long time and harmonious relations exist between the board and the management team.

Last but certainly not least, the relationship between a Non-Executive Chairman (and the board more generally) and the CEO is, as we write in our research paper on this subject (download it here) a critical organizational bearing point on which long-term organizational survival and success rests.

This is another situation in which things that were quite obvious when one was a C-Level executive often cease to be so when one becomes a director.

In an era of unprecedented complexity and uncertainty, in which digitization and network effects are causing more and more industries to display “winner take all” dynamics, effective board governance has never been more important.

People with previous C-Level executive experience are well positioned to provide it. However, before they can make their critical contributions, there are challenges they must first overcome in the “not as easy as it looks” transition process to becoming an effective board director.

Comments

COVID Has Laid Bare Too Many Leaders' Lack of Critical Thinking Skills

21/May/21 14:34

One definition of critical thinking is “the use of a rigorous process to reach justifiable inferences.” The actions of various officials during the COVID pandemic have made painfully clear that it is a skill in very short supply.

In the United States, the ongoing debate over when to reopen schools for in-person instruction has put paid to K12 leaders’ frequent claim that they teach students how to think critically.

The battle over school reopening in the United States is a perfect case study.

Example #1: Framing of the reopening issue has ignored basic principles of inductive reasoning

Teachers unions and their supporters have basically demanded that district and state leaders (not to mention parents), “prove to us that it is safe to return to school.” And that is just what most proponents of reopening schools have tried to do.

Unfortunately, this approach runs smack into the so-called “problem of induction”, that was first identified by the philosopher David Hume his “Treatise on Human Nature”, published in 1739: No amount of evidence can ever conclusively prove that a hypothesis is true.

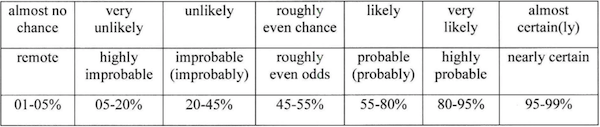

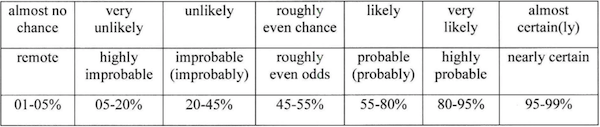

To be sure, there are techniques available for systematically weighing evidence to adjust your confidence in the likelihood that a hypothesis is true, such as the Baconian, Bayesian, and Dempster-Shafer methods. But I can find no examples of these methods being applied in any district’s debate about reopening schools.

Instead, parents and employers have repeatedly been treated to the ugly spectacle of both sides of this debate randomly hurling different studies at each other, without any attempt to systematically weigh the evidence they provide.

Nor up until recently have we seen any attempts to use Karl Popper’s approach to avoiding Hume’s problem of induction: Using evidence to falsify rather than prove a claim.

Fortunately this has begun to change, as more evidence accumulates that schools are not dangerous vectors of COVID transmission.

Example #2: Deductive reasoning has been absent

In response, reopening opponents have made a new claim: That in-person instruction is still not safe because of the prevailing rate of positive COVID tests and/or case numbers in the community surrounding the school district.

This has triggered an endless argument about what the community positive rate means for the safety of in-person instruction.

This argument will never end unless and until the warring parties start to complement induction with deductive reasoning — in this case, actually modeling the multiple factors that affect the level of COVID infection risk in school classrooms.

In addition to assumptions about the relative importance of different infection vectors (surface contact, droplet, and aerosols), and the community infection rate (which drives the probability that a student or adult at a school will be COVID positive and asymptomatic), other factors include the cubic feet of space per person in a classroom, the activity being performed (e.g., singing versus a lecture), the length of time a group is in the classroom, and HVAC system parameters (air changes per hour, percentage of outside air exchanged, type of filters in use, windows open/closed, etc.).

Yet I have yet to see this type of modeling systematically incorporated into state and school district discussions about how to measure and manage reopening risks. Unsurprisingly, it also seems to have been completely ignored by the teachers unions.

In the future, every party making claims and/or decisions about school reopening and COVID risk should have to answer these three questions, which have rarely been asked:

(1) What variables are you using in your model of in-school COVID infection risk?

(2) What assumptions are you making about the values of these variables, and how they interact to determine the level of infection risk?

(3) On what evidence are your assumptions based?

Example #3: Few if any forecast-based claims made during the debate of school reopening have been accompanied by estimates of the degree of uncertainty associated with them

Broadly speaking, there are four categories of uncertainty associated with any forecast.

First, there is uncertainty arising from the applicability of a given theory to the situation at hand.

For example, initial forecasts for the spread of COVID were based on the standard “Susceptible – Infected – Recovered” or “SIR” model of infectious disease epidemics. This model assumed that a homogenous population of agents would randomly encounter each other. With some probability, encounters between infected and non-infected agents would produce more infections. Some percentage of infected agents would die, and some would recover, and thereafter become immune to additional infection.

As it has turned out, the standard model’s assumptions did not match the reality of the COVID epidemic. For example, the population was not homogenous – some had characteristics (like age) and conditions (like asthma, obesity) that made them much more likely to become infected and die. Nor were encounters between infected and non-infected agents random – different people followed different patterns -- like riding the subway each day – that created higher and lower risks of infection, or infecting others (i.e., the impact of “superspreaders”). Finally, in the case of COVID, surviving infection did not make people immune to future infections (e.g., with a new variant of SARS-CoV-2) or infecting others.

Second, there is uncertainty associated with the way a theory is translated into a quantitative forecasting model. In the case of COVID, one of the challenges was how to model the impact of lockdowns and varying rates of compliance with them.

Third, there is uncertainty about what values to put on various parameters contained in a model – for example, to take into account the range of possible impacts that superspreaders could have.

Fourth, there is uncertainty associated with the possible presence of calculation errors within the model, particularly in light of research that has found that a substantial number of models have them (this is why more and more organizations now have separate Model Validation and Verification teams).

Example #4: Authorities’ decision processes have not clearly defined, acknowledged, and systematically traded off different parties’ competing goals.

The Wharton School at the University of Pennsylvania has produced an eye-opening economic analysis of the school reopening issue, modeling both students lost lifetime earnings due to school closure and the cost of COVID infection risk, using the same type of “statistical value of a life” used in other public risk analyses (e.g., of the costs and benefits of raising speed limits).

This analysis finds that, assuming minimal learning versus in-classroom instruction and no-recovery of learning losses, students lose between $12,000 and $15,000 in lifetime earnings for each month that schools remain closed.

To be conservative, let’s assume that due to somewhat effective remote instruction and recovery of learning losses, the average earnings hit is “only” $6,000 per month, and that schools “only” remain closed for nine months (three in the spring of 2020, and six during this school year). In a district of 25,000 students, the economic cost of unrecovered student learning losses is $1.4 billion. You read that right: $1.4 billion.

And that doesn’t include the cost of job losses (usually by mothers) caused by extended period of remote learning.

Given the high cost to students, the Wharton team concluded that it only makes sense to continue remote learning if in-person instruction would plausibly cause .355 new community COVID cases per student. And there is no evidence that this is the case.

However, I have yet to hear this long-term cost to students or this tradeoff mentioned in leaders’ discussions about returning to in-person instruction.

Instead, I’ve seen teachers unions opposed to returning to in-person instruction roll out the same playbook they routinely use in discussions about tenure and dismissal of poorly performing teachers.

This argument is based on the concept of Type-1 and Type-2 errors when testing a hypothesis. Errors of commission are Type-1 errors, also known as “false alarms.” Errors of omission are Type-2 errors, or “missed alarms.” There is an unavoidable trade-off between them — the more you reduce the likelihood of errors of commission, the more you increase the probability of errors of omission.

Here’s a real life example: If you incorrectly identify a teacher as poorly performing and dismiss them, you have made an error of commission. If you incorrectly fail to identify a poorly performing teacher and therefore fail to dismiss them, you have committed an error of omission.

Unfortunately, the cost of these two errors is highly asymmetrical. Teachers unions claim tenure is necessary to minimize the chance of errors of commission — wrongfully dismissing a teacher who is not poorly performing. They completely neglect the cost of the corresponding increase in the probability of errors of omission — failing to dismiss poor performers.

As Chetty, Friedman, and Rockhoff found in “Measuring the Impacts of Teachers”, this cost is extremely high — each student suffers an estimated lifetime earnings loss of $52,000. Assuming the poor teacher has a class of 25 students each year for 30 years, the total cost is $39 million.

We face the same tradeoff between errors of commission and omission in the school reopening decision. But yet again, we are failing to think critically about it, by explicitly discussing different parties’ competing goals, and how politicians and district leaders should weigh them in their decision process.

To reduce the probability of errors of commission (teachers becoming infected with COVID in school), teachers unions are refusing to return to in-person instruction until the risk of infection has effectively been eliminated. In turn, they expect students, parents, employers, and society to bear the burden of the far higher cost of the corresponding error of omission: failing to return to school when it was safe to do so. This cost is plausibly estimated to run into the high billions, if not trillions on the national level.

The predictable response of some who read this third critique of their lack of critical thinking is to once again toss critical thinking aside, and implausibly deny that students’ learning losses exist, or claim that they will easily be recovered.

Example #5: District decision makers have also fallen into other “wicked problem” traps

Dr. Anne-Marie Grisogono recently retired from the Australia Department of Defence’s Science and Technology Organization. She is one of the world’s leading experts on complex adaptive systems and the wicked problems that emerge from them.

Wicked problems are “characterized by multiple interdependent goals that are often poorly framed, unrealistic or conflicted, vague or not explicitly stated. Moreover, stakeholders will often disagree on the weights to place on the different goals, or change their minds.” When the pandemic arrived, leaders faced a classic wicked problem.

In a paper published last year (“How Could Future AI Help Tackle Global Complex Problems?") Grisogono described the traps that decision makers usually fall into when struggling with a wicked problem.

These will surprise nobody who has watched most school district decision makers during the pandemic.

One trap is structuring a complex decision process such that nobody involved is responsible for explicitly trading off competing goals Put differently, the buck stops at nobody’s desk. In the case of COVID, we have repeatedly seen health officials make decisions (e.g., imposing lockdowns) based solely on minimizing the risk of infections, without regard to associated economic, mental health, and student learning losses involved.

Grisogono describes other traps that have also been much in evidence during the COVID pandemic.

“Low ambiguity tolerance was found to be a significant factor in precipitating the behavior of prematurely jumping to conclusions about the nature of the problem and what was to be done about it, despite considerable uncertainty…

“The chosen (usually ineffective) course of action was then defended and persevered due to a combination of confirmation bias, commitment bias, and loss aversion, in spite of accumulating contradictory evidence.

“The unfolding disaster was compounded by a number of other reasoning shortcomings such as difficulties in steering processes with long time delays and in projecting cumulative and non-linear processes.”

Conclusion

As I said at the beginning of this post, one definition of critical thinking is “the use of a rigorous process to reach justifiable inferences.”

Unfortunately, there is abundant and damning evidence that critical thinking has been notable by its absence among too many leaders who have been making critical decisions in the fact of complexity, uncertainty, and time pressure during the course of the COVID pandemic. And millions of people have paid the price.

In the United States, the ongoing debate over when to reopen schools for in-person instruction has put paid to K12 leaders’ frequent claim that they teach students how to think critically.

The battle over school reopening in the United States is a perfect case study.

Example #1: Framing of the reopening issue has ignored basic principles of inductive reasoning

Teachers unions and their supporters have basically demanded that district and state leaders (not to mention parents), “prove to us that it is safe to return to school.” And that is just what most proponents of reopening schools have tried to do.

Unfortunately, this approach runs smack into the so-called “problem of induction”, that was first identified by the philosopher David Hume his “Treatise on Human Nature”, published in 1739: No amount of evidence can ever conclusively prove that a hypothesis is true.

To be sure, there are techniques available for systematically weighing evidence to adjust your confidence in the likelihood that a hypothesis is true, such as the Baconian, Bayesian, and Dempster-Shafer methods. But I can find no examples of these methods being applied in any district’s debate about reopening schools.

Instead, parents and employers have repeatedly been treated to the ugly spectacle of both sides of this debate randomly hurling different studies at each other, without any attempt to systematically weigh the evidence they provide.

Nor up until recently have we seen any attempts to use Karl Popper’s approach to avoiding Hume’s problem of induction: Using evidence to falsify rather than prove a claim.

Fortunately this has begun to change, as more evidence accumulates that schools are not dangerous vectors of COVID transmission.

Example #2: Deductive reasoning has been absent

In response, reopening opponents have made a new claim: That in-person instruction is still not safe because of the prevailing rate of positive COVID tests and/or case numbers in the community surrounding the school district.

This has triggered an endless argument about what the community positive rate means for the safety of in-person instruction.

This argument will never end unless and until the warring parties start to complement induction with deductive reasoning — in this case, actually modeling the multiple factors that affect the level of COVID infection risk in school classrooms.

In addition to assumptions about the relative importance of different infection vectors (surface contact, droplet, and aerosols), and the community infection rate (which drives the probability that a student or adult at a school will be COVID positive and asymptomatic), other factors include the cubic feet of space per person in a classroom, the activity being performed (e.g., singing versus a lecture), the length of time a group is in the classroom, and HVAC system parameters (air changes per hour, percentage of outside air exchanged, type of filters in use, windows open/closed, etc.).

Yet I have yet to see this type of modeling systematically incorporated into state and school district discussions about how to measure and manage reopening risks. Unsurprisingly, it also seems to have been completely ignored by the teachers unions.

In the future, every party making claims and/or decisions about school reopening and COVID risk should have to answer these three questions, which have rarely been asked:

(1) What variables are you using in your model of in-school COVID infection risk?

(2) What assumptions are you making about the values of these variables, and how they interact to determine the level of infection risk?

(3) On what evidence are your assumptions based?

Example #3: Few if any forecast-based claims made during the debate of school reopening have been accompanied by estimates of the degree of uncertainty associated with them

Broadly speaking, there are four categories of uncertainty associated with any forecast.

First, there is uncertainty arising from the applicability of a given theory to the situation at hand.

For example, initial forecasts for the spread of COVID were based on the standard “Susceptible – Infected – Recovered” or “SIR” model of infectious disease epidemics. This model assumed that a homogenous population of agents would randomly encounter each other. With some probability, encounters between infected and non-infected agents would produce more infections. Some percentage of infected agents would die, and some would recover, and thereafter become immune to additional infection.

As it has turned out, the standard model’s assumptions did not match the reality of the COVID epidemic. For example, the population was not homogenous – some had characteristics (like age) and conditions (like asthma, obesity) that made them much more likely to become infected and die. Nor were encounters between infected and non-infected agents random – different people followed different patterns -- like riding the subway each day – that created higher and lower risks of infection, or infecting others (i.e., the impact of “superspreaders”). Finally, in the case of COVID, surviving infection did not make people immune to future infections (e.g., with a new variant of SARS-CoV-2) or infecting others.

Second, there is uncertainty associated with the way a theory is translated into a quantitative forecasting model. In the case of COVID, one of the challenges was how to model the impact of lockdowns and varying rates of compliance with them.

Third, there is uncertainty about what values to put on various parameters contained in a model – for example, to take into account the range of possible impacts that superspreaders could have.

Fourth, there is uncertainty associated with the possible presence of calculation errors within the model, particularly in light of research that has found that a substantial number of models have them (this is why more and more organizations now have separate Model Validation and Verification teams).

Example #4: Authorities’ decision processes have not clearly defined, acknowledged, and systematically traded off different parties’ competing goals.

The Wharton School at the University of Pennsylvania has produced an eye-opening economic analysis of the school reopening issue, modeling both students lost lifetime earnings due to school closure and the cost of COVID infection risk, using the same type of “statistical value of a life” used in other public risk analyses (e.g., of the costs and benefits of raising speed limits).

This analysis finds that, assuming minimal learning versus in-classroom instruction and no-recovery of learning losses, students lose between $12,000 and $15,000 in lifetime earnings for each month that schools remain closed.

To be conservative, let’s assume that due to somewhat effective remote instruction and recovery of learning losses, the average earnings hit is “only” $6,000 per month, and that schools “only” remain closed for nine months (three in the spring of 2020, and six during this school year). In a district of 25,000 students, the economic cost of unrecovered student learning losses is $1.4 billion. You read that right: $1.4 billion.

And that doesn’t include the cost of job losses (usually by mothers) caused by extended period of remote learning.

Given the high cost to students, the Wharton team concluded that it only makes sense to continue remote learning if in-person instruction would plausibly cause .355 new community COVID cases per student. And there is no evidence that this is the case.

However, I have yet to hear this long-term cost to students or this tradeoff mentioned in leaders’ discussions about returning to in-person instruction.

Instead, I’ve seen teachers unions opposed to returning to in-person instruction roll out the same playbook they routinely use in discussions about tenure and dismissal of poorly performing teachers.

This argument is based on the concept of Type-1 and Type-2 errors when testing a hypothesis. Errors of commission are Type-1 errors, also known as “false alarms.” Errors of omission are Type-2 errors, or “missed alarms.” There is an unavoidable trade-off between them — the more you reduce the likelihood of errors of commission, the more you increase the probability of errors of omission.

Here’s a real life example: If you incorrectly identify a teacher as poorly performing and dismiss them, you have made an error of commission. If you incorrectly fail to identify a poorly performing teacher and therefore fail to dismiss them, you have committed an error of omission.

Unfortunately, the cost of these two errors is highly asymmetrical. Teachers unions claim tenure is necessary to minimize the chance of errors of commission — wrongfully dismissing a teacher who is not poorly performing. They completely neglect the cost of the corresponding increase in the probability of errors of omission — failing to dismiss poor performers.

As Chetty, Friedman, and Rockhoff found in “Measuring the Impacts of Teachers”, this cost is extremely high — each student suffers an estimated lifetime earnings loss of $52,000. Assuming the poor teacher has a class of 25 students each year for 30 years, the total cost is $39 million.

We face the same tradeoff between errors of commission and omission in the school reopening decision. But yet again, we are failing to think critically about it, by explicitly discussing different parties’ competing goals, and how politicians and district leaders should weigh them in their decision process.

To reduce the probability of errors of commission (teachers becoming infected with COVID in school), teachers unions are refusing to return to in-person instruction until the risk of infection has effectively been eliminated. In turn, they expect students, parents, employers, and society to bear the burden of the far higher cost of the corresponding error of omission: failing to return to school when it was safe to do so. This cost is plausibly estimated to run into the high billions, if not trillions on the national level.

The predictable response of some who read this third critique of their lack of critical thinking is to once again toss critical thinking aside, and implausibly deny that students’ learning losses exist, or claim that they will easily be recovered.

Example #5: District decision makers have also fallen into other “wicked problem” traps

Dr. Anne-Marie Grisogono recently retired from the Australia Department of Defence’s Science and Technology Organization. She is one of the world’s leading experts on complex adaptive systems and the wicked problems that emerge from them.

Wicked problems are “characterized by multiple interdependent goals that are often poorly framed, unrealistic or conflicted, vague or not explicitly stated. Moreover, stakeholders will often disagree on the weights to place on the different goals, or change their minds.” When the pandemic arrived, leaders faced a classic wicked problem.

In a paper published last year (“How Could Future AI Help Tackle Global Complex Problems?") Grisogono described the traps that decision makers usually fall into when struggling with a wicked problem.

These will surprise nobody who has watched most school district decision makers during the pandemic.

One trap is structuring a complex decision process such that nobody involved is responsible for explicitly trading off competing goals Put differently, the buck stops at nobody’s desk. In the case of COVID, we have repeatedly seen health officials make decisions (e.g., imposing lockdowns) based solely on minimizing the risk of infections, without regard to associated economic, mental health, and student learning losses involved.

Grisogono describes other traps that have also been much in evidence during the COVID pandemic.

“Low ambiguity tolerance was found to be a significant factor in precipitating the behavior of prematurely jumping to conclusions about the nature of the problem and what was to be done about it, despite considerable uncertainty…

“The chosen (usually ineffective) course of action was then defended and persevered due to a combination of confirmation bias, commitment bias, and loss aversion, in spite of accumulating contradictory evidence.

“The unfolding disaster was compounded by a number of other reasoning shortcomings such as difficulties in steering processes with long time delays and in projecting cumulative and non-linear processes.”

Conclusion

As I said at the beginning of this post, one definition of critical thinking is “the use of a rigorous process to reach justifiable inferences.”

Unfortunately, there is abundant and damning evidence that critical thinking has been notable by its absence among too many leaders who have been making critical decisions in the fact of complexity, uncertainty, and time pressure during the course of the COVID pandemic. And millions of people have paid the price.

The BIN Model of Forecasting Errors and Its Implications for Improving Predictive Accuracy

08/Apr/21 07:52

At Britten Coyne Partners, Index Investor, and the Strategic Risk Institute, we all share a common mission: To help clients avoid failure by better anticipating, more accurately assessing, and adapting in time to emerging strategic threats.

Improving clients’ (and our own) forecasting process to increase predictive accuracy is critical to our mission and our research in this area is ongoing. In this note, we’ll review a very interesting new paper that has the potential to significantly improve forecast accuracy.

In “Bias, Information, Noise: The BIN Model of Forecasting”, Satopaa, Salikhov, Tetlock, and Mellers introduce a new approach to rigorously assessing the impact of three root causes of forecasting errors. In so doing, they create the opportunity for individuals and organizations to take more carefully targeted actions to improve the predictive accuracy of their forecasts.

In the paper, Satopaa et al decompose forecast errors into three parts, based on the impact of bias, partial information, and noise. They assume that, “forecasters sample and interpret signals with varying skill and thoroughness. They may sample relevant signals (increasing partial information) or irrelevant signals (creating noise). Furthermore, they may center the signals incorrectly (creating bias).”

Let’s start by taking a closer look at each root cause.

WHAT IS BIAS?

A bias is a systematic error that reduces forecast accuracy in a predictable way. Researchers have extensively studied biases and many of them have become well known. Here are some examples:

Over-Optimism: Tali Sharot’s research has shown how humans have a natural bias towards optimism. We are much more prone to updating our beliefs when a new piece of information is positive (i.e., better than expected in light of our goals) rather than negative (“How Unrealistic Optimism is Maintained in the Face of Reality”).

Confirmation: We tend to seek, pay more attention to, and place more weight on information that supports our current beliefs than information that is inconsistent with or contradicts them (this is also known as “my-side” bias).

Social Ranking: Another bias that is deeply rooted in our evolutionary past is the predictable impact of competition for status within a group. Researchers have found that when the result of a decision will be private (not observed by others), we tend to be risk averse. But when the result will be observed, we tend to be risk seeking (e.g., “Interdependent Utilities: How Social Ranking Affects Choice Behavior” by Bault et al).

Social Conformity: Another evolutionary instinct comes into play when uncertainty is high. Under this condition, we are much more likely to rely on social learning and copying the behavior of other group members, and to put less emphasis on any private information we have that is inconsistent with or contradicts the group’s dominant view. The evolutionary basis for this heightened conformity is clear – you don’t want to be cast out of your group when uncertainty is high.

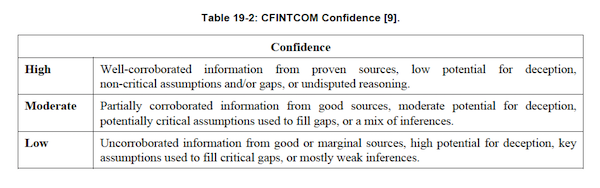

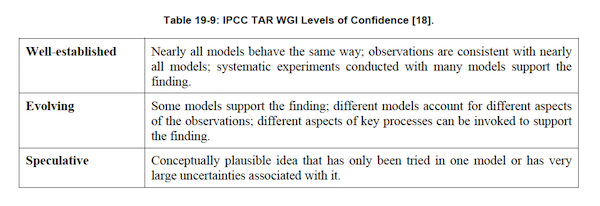

Overconfidence/Uncertainty Neglect: The over-optimism, confirmation, social ranking, and social conformity biases all contribute to forecasters’ systematic neglect of when we make and communicate forecasts. We described this bias (and how to overcome it) much more detail in our February blog post, “How to Effectively Communicate Forecast Probability and Analytic Confidence”.

Surprise Neglect: While less well known than many other biases, this one is, in our experience, one of the most important. Surprise is a critically important feeling that is triggered when our conscious or unconscious attention is attracted to something that violates our expectations of how the world should behave, given our mental model of the phenomenon in question (e.g., stock market valuations increasing when macroeconomic conditions appear to be getting worse). From an evolutionary perspective, surprise helps humans to survive by forcing them to revise their mental models in order to more accurately perceive the world – especially its unexpected dangers and opportunities. Unfortunately, the feeling of surprise is often fleeting.

As Daniel Kahneman noted in his book, “Thinking Fast and Slow”, when confronted with surprise, our automatic, subconscious reasoning system (“System 1”) will quickly attempt to adjust our beliefs to eliminate the feeling. It is only when the adjustment is too big that our conscious reasoning system (“System 2”) is triggered to examine its cause us to feel surprised – but even then, System 1 will still keep trying to make the feeling of surprise disappear. Surprise neglect is one of the most underappreciated reasons that inaccurate mental models tend to persist.

WHAT IS PARTIAL INFORMATION?

In the context of the BIN Model, “information” refers to the extent to which we have complete (and accurate) information about the process generating the future results we seek to forecast.

For example, when a fair coin is flipped four times, we have complete information that enables us to predict the probability of different outcomes with complete confidence. This is the realm of decision in the face of risk.

When the complexity of the results generating process increases, we move from the realm of risk into the realm of uncertainty, in which we often do not fully understand the full range of possible outcomes, their probabilities, or their consequences.

Under these circumstances, forecasters have varying degrees of information about the process generating future results, and/or models of varying degrees of accuracy for interpreting the meaning of the information they have. Both contribute to forecast inaccuracy.

WHAT IS NOISE?

“Noise” is unsystematic, unpredictable, random errors that contribute to forecast inaccuracy. Kahneman defines it as “the chance variability of judgments.”

Sources of noise that are external to forecasters include randomness in the results generating process itself (and, as in the case of complex adaptive systems, the deliberate actions of the intelligent agents who comprise that process). Internal sources of noise include forecasters’ use of low or no value information about and/or a model of a results generating process that are either inaccurate or irrelevant, or that vary over time (often unconsciously).

After applying their analytical “BIN” framework to the results of the Good Judgment Project (a four-year geopolitical forecasting tournament described in the book “Superforecasting” in which one of us participated), Satopaa and his co-authors conclude that, “forecasters fall short of perfect forecasting [accuracy] due more to noise than bias or lack of information. Eliminating noise would reduce forecast errors … by roughly 50%; eliminating bias would yield a roughly 25% cut; [and] increasing information would account for the remaining 25%. In sum, from a variety of analytical angles, reducing noise is roughly twice as effective as reducing bias or increasing information.”

Moreover, they authors found that a variety of interventions used by the Good Judgment Project that were intended to reduce forecaster bias (such as training and teaming) actually had their biggest impact on reducing noise. They note that, “reducing bias may be harder than reducing noise due to the tenacious nature of certain cognitive biases” (a point also made by Kahneman in his Harvard Business Review article, “Noise: How to Overcome the High, Hidden Cost of Inconsistent Decision Making”).

The BIN model highlights three levers for improving forecast accuracy: Reducing Bias, Improving Information, and Reducing Noise. Let’s look at some effective techniques in each of these areas.

HOW TO REDUCE BIAS

The first point to make about bias reduction is that a considerable body of research has concluded that this is very difficult to do, for the simple reason that deep in our evolutionary past, what we negatively refer to as “biases” served a positive evolutionary purpose (e.g., overconfidence helped to attract mates).

That said, both our experience with Britten Coyne Partners’ clients and academic research has found that two techniques are often effective.

Reference/Base Rates and Shrinkage: Too often we behave as if the only information that matters is what we know about the question or results we are trying to forecast. We fail to take into account how things have turned out in similar cases in the past (this is know as the reference or base rate). So-called “shrinkage” methods start by identifying a relevant base rate for the forecast, then move on to developing a forecast based on the specific situation under consideration. The more similar the specific situation is to the ones used to calculate the base rate, the more the specific probability is “shrunk” towards the base rate probability.

Pre-Mortem Analysis: Popularized by Gary Klein, in a pre-mortem a team is told to assume that it is some point in the future, and a forecast (or plan) has failed. They are told to anonymously write down the causes of the failure, including critical signals that were missed, and what could have been done differently to increase the probability of success. Pre-mortems reduce over-optimism and overconfidence, and produce two critical outputs: improvement in forecasts and plans, and the identification of critical uncertainties about which more information needs to be collected as the future unfolds (which improves signal quality). The power of pre-mortems is due to the fact that humans have a much easier time (and are much more detailed) in explaining the past than they are when asked to forecast the future – hence the importance of situating a group in the future, and asking them to explain a past that has yet to occur.

HOW TO INCREASE RELEVANT INFORMATION (SIGNAL)?

Hyperconnectivity has unleashed upon us a daily flood of information with which many people are unable to cope when they have to make critical decisions (and forecasts) in the face of uncertainty. Two approaches can help.

Information Value Analysis: Bayes Theory provides a method for separating high value signals from the flood of noise that accompanies them. Let’s say that you have determined that three outcomes (A, B, and C) are possible. The value of a new piece of information (or related pieces of evidence) can be determined based on the likelihood you would observe it if Outcomes A, B, or C happen. If the information is much more likely to be observed in the case of just one outcome, it has high value. If it is equally likely under all three outcomes, it has no value. More difficult, but just as informative, is applying this same logic to the absence of a piece of information. This analysis (and the outcome probability estimates) should be repeated at regular intervals to assess newly arriving evidence.

Assumptions Analysis: Probabilistic forecasts rest on a combination of (1) facts, (2) assumptions about critical uncertainties, (3) the evidence (of varying reliability and information value) supporting those assumptions, and (4) the logic used to reach the forecaster’s conclusion. In our forecasting work with clients over the years, we have found that discussing the assumptions made about critical uncertainties, and, less frequently the forecast logic itself, generates very productive discussions and improves predictive accuracy.

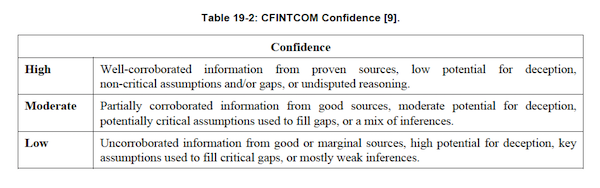

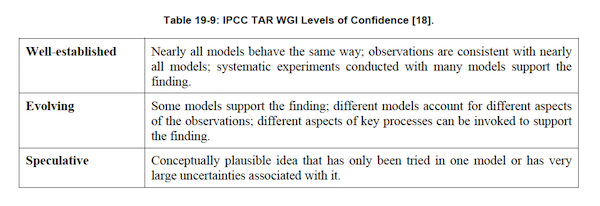

In particular, Marvin Cohen’s approach has proved quite practical. His research found that the greater the number of assumptions about “known unknowns” (i.e., recognized uncertainties) that underlie a forecast, and the weaker the evidence that supports them, the lower confidence one should have in the forecast’s accuracy.

Also, the more assumptions about “known unknowns” that are used in a forecast, the more likely it is that potentially critical “unknown unknowns” remain to be discovered, which again should lower your confidence in the forecast (e.g., see, “Metarecognition in Time-Stressed Decision Making: Recognizing, Critiquing, and Correcting” by Cohen, Freeman, and Wolf).

HOW TO REDUCE NOISE?

Combine and Extremize Forecasts: Research has found that three steps can improve forecast accuracy. The first is seeking forecasts based on different forecasting methodologies, or prepared by forecasters with significantly different backgrounds (as a proxy for different mental models and information). The second is combining those forecasts (using a simple average if few are included, or the median if many are). The final step, which significantly improved the performance of the Good Judgment Project team in the IARPA forecasting tournament, is to “extremize” the average (mean) or median forecast by moving it closer to 0% or 100%.

Averaging forecasts assumes that the differences between them are all due to noise. However, as the number of forecasts being combined increases, use of the median produces the greatest increase in accuracy because it does not “average away” all information differences between forecasters. However, that still leaves whatever bias is present in the median forecast.

Forecasts for binary events (e.g., the probability an event will or will not happen within a given time frame) are most useful to decision makers when they are closer to 0% or 100% rather than the uninformative “coin toss” estimate of a 50% probability. As described by Baron et al in “Two Reasons to Make Aggregated Probability Forecasts More Extreme”, individual forecasters will often shrink their probability estimates towards 50% to take into account their subjective belief about the extent of potentially useful information that they are missing.

For this reason, forecast accuracy can usually be increased when you employ a structured “extremizing” technique to move the mean or median probability estimate closer to 0% or 100%. Note that the extremizing factor should be lower when average forecaster expertise is higher. This is based on the assumption that a group of expert forecasters will incorporate more of the full amount of potentially useful information than will novice forecasters. (See: “Two Reasons to Make Aggregated Probability Forecasts More Extreme”, by Baron et al, and “Decomposing the Effects of Crowd-Wisdom Aggregators”, by Satopaa et al).

Use a Forecasting Algorithm: Use of an algorithm (whose structure can be inferred from top human forecasters’ performance) ensures that a forecast’s information inputs and their weighting are consistent over time. In some cases, this approach can be automated; in others, it involves having a group of forecasters (e.g., interviewers of new hire candidates) ask the same set of questions and use the same rating scale, which facilitates the consistent combination of their inputs. Kahneman has also found that testing these algorithmic conclusions against forecasters’ intuition, and then examining the underlying reasons for any disagreements between the results of the two methods can sometimes improve results.

However, it is also critical to note that this algorithmic approach implicitly assumes that the underlying process generating the results being forecast is stable over time. In the case of complex adaptive systems (which are constantly evolving), this is not true.

Unfortunately, many of the critical forecasting challenges we face involve results produced by complex adaptive systems. For the foreseeable future, expert human forecasters will still be needed to meet them. By decomposing the root causes of forecasting error, the BIN model will help them to do that.

Improving clients’ (and our own) forecasting process to increase predictive accuracy is critical to our mission and our research in this area is ongoing. In this note, we’ll review a very interesting new paper that has the potential to significantly improve forecast accuracy.

In “Bias, Information, Noise: The BIN Model of Forecasting”, Satopaa, Salikhov, Tetlock, and Mellers introduce a new approach to rigorously assessing the impact of three root causes of forecasting errors. In so doing, they create the opportunity for individuals and organizations to take more carefully targeted actions to improve the predictive accuracy of their forecasts.

In the paper, Satopaa et al decompose forecast errors into three parts, based on the impact of bias, partial information, and noise. They assume that, “forecasters sample and interpret signals with varying skill and thoroughness. They may sample relevant signals (increasing partial information) or irrelevant signals (creating noise). Furthermore, they may center the signals incorrectly (creating bias).”

Let’s start by taking a closer look at each root cause.

WHAT IS BIAS?

A bias is a systematic error that reduces forecast accuracy in a predictable way. Researchers have extensively studied biases and many of them have become well known. Here are some examples:

Over-Optimism: Tali Sharot’s research has shown how humans have a natural bias towards optimism. We are much more prone to updating our beliefs when a new piece of information is positive (i.e., better than expected in light of our goals) rather than negative (“How Unrealistic Optimism is Maintained in the Face of Reality”).

Confirmation: We tend to seek, pay more attention to, and place more weight on information that supports our current beliefs than information that is inconsistent with or contradicts them (this is also known as “my-side” bias).

Social Ranking: Another bias that is deeply rooted in our evolutionary past is the predictable impact of competition for status within a group. Researchers have found that when the result of a decision will be private (not observed by others), we tend to be risk averse. But when the result will be observed, we tend to be risk seeking (e.g., “Interdependent Utilities: How Social Ranking Affects Choice Behavior” by Bault et al).

Social Conformity: Another evolutionary instinct comes into play when uncertainty is high. Under this condition, we are much more likely to rely on social learning and copying the behavior of other group members, and to put less emphasis on any private information we have that is inconsistent with or contradicts the group’s dominant view. The evolutionary basis for this heightened conformity is clear – you don’t want to be cast out of your group when uncertainty is high.

Overconfidence/Uncertainty Neglect: The over-optimism, confirmation, social ranking, and social conformity biases all contribute to forecasters’ systematic neglect of when we make and communicate forecasts. We described this bias (and how to overcome it) much more detail in our February blog post, “How to Effectively Communicate Forecast Probability and Analytic Confidence”.

Surprise Neglect: While less well known than many other biases, this one is, in our experience, one of the most important. Surprise is a critically important feeling that is triggered when our conscious or unconscious attention is attracted to something that violates our expectations of how the world should behave, given our mental model of the phenomenon in question (e.g., stock market valuations increasing when macroeconomic conditions appear to be getting worse). From an evolutionary perspective, surprise helps humans to survive by forcing them to revise their mental models in order to more accurately perceive the world – especially its unexpected dangers and opportunities. Unfortunately, the feeling of surprise is often fleeting.

As Daniel Kahneman noted in his book, “Thinking Fast and Slow”, when confronted with surprise, our automatic, subconscious reasoning system (“System 1”) will quickly attempt to adjust our beliefs to eliminate the feeling. It is only when the adjustment is too big that our conscious reasoning system (“System 2”) is triggered to examine its cause us to feel surprised – but even then, System 1 will still keep trying to make the feeling of surprise disappear. Surprise neglect is one of the most underappreciated reasons that inaccurate mental models tend to persist.

WHAT IS PARTIAL INFORMATION?

In the context of the BIN Model, “information” refers to the extent to which we have complete (and accurate) information about the process generating the future results we seek to forecast.

For example, when a fair coin is flipped four times, we have complete information that enables us to predict the probability of different outcomes with complete confidence. This is the realm of decision in the face of risk.

When the complexity of the results generating process increases, we move from the realm of risk into the realm of uncertainty, in which we often do not fully understand the full range of possible outcomes, their probabilities, or their consequences.

Under these circumstances, forecasters have varying degrees of information about the process generating future results, and/or models of varying degrees of accuracy for interpreting the meaning of the information they have. Both contribute to forecast inaccuracy.

WHAT IS NOISE?

“Noise” is unsystematic, unpredictable, random errors that contribute to forecast inaccuracy. Kahneman defines it as “the chance variability of judgments.”

Sources of noise that are external to forecasters include randomness in the results generating process itself (and, as in the case of complex adaptive systems, the deliberate actions of the intelligent agents who comprise that process). Internal sources of noise include forecasters’ use of low or no value information about and/or a model of a results generating process that are either inaccurate or irrelevant, or that vary over time (often unconsciously).

After applying their analytical “BIN” framework to the results of the Good Judgment Project (a four-year geopolitical forecasting tournament described in the book “Superforecasting” in which one of us participated), Satopaa and his co-authors conclude that, “forecasters fall short of perfect forecasting [accuracy] due more to noise than bias or lack of information. Eliminating noise would reduce forecast errors … by roughly 50%; eliminating bias would yield a roughly 25% cut; [and] increasing information would account for the remaining 25%. In sum, from a variety of analytical angles, reducing noise is roughly twice as effective as reducing bias or increasing information.”

Moreover, they authors found that a variety of interventions used by the Good Judgment Project that were intended to reduce forecaster bias (such as training and teaming) actually had their biggest impact on reducing noise. They note that, “reducing bias may be harder than reducing noise due to the tenacious nature of certain cognitive biases” (a point also made by Kahneman in his Harvard Business Review article, “Noise: How to Overcome the High, Hidden Cost of Inconsistent Decision Making”).

The BIN model highlights three levers for improving forecast accuracy: Reducing Bias, Improving Information, and Reducing Noise. Let’s look at some effective techniques in each of these areas.

HOW TO REDUCE BIAS

The first point to make about bias reduction is that a considerable body of research has concluded that this is very difficult to do, for the simple reason that deep in our evolutionary past, what we negatively refer to as “biases” served a positive evolutionary purpose (e.g., overconfidence helped to attract mates).

That said, both our experience with Britten Coyne Partners’ clients and academic research has found that two techniques are often effective.

Reference/Base Rates and Shrinkage: Too often we behave as if the only information that matters is what we know about the question or results we are trying to forecast. We fail to take into account how things have turned out in similar cases in the past (this is know as the reference or base rate). So-called “shrinkage” methods start by identifying a relevant base rate for the forecast, then move on to developing a forecast based on the specific situation under consideration. The more similar the specific situation is to the ones used to calculate the base rate, the more the specific probability is “shrunk” towards the base rate probability.

Pre-Mortem Analysis: Popularized by Gary Klein, in a pre-mortem a team is told to assume that it is some point in the future, and a forecast (or plan) has failed. They are told to anonymously write down the causes of the failure, including critical signals that were missed, and what could have been done differently to increase the probability of success. Pre-mortems reduce over-optimism and overconfidence, and produce two critical outputs: improvement in forecasts and plans, and the identification of critical uncertainties about which more information needs to be collected as the future unfolds (which improves signal quality). The power of pre-mortems is due to the fact that humans have a much easier time (and are much more detailed) in explaining the past than they are when asked to forecast the future – hence the importance of situating a group in the future, and asking them to explain a past that has yet to occur.

HOW TO INCREASE RELEVANT INFORMATION (SIGNAL)?

Hyperconnectivity has unleashed upon us a daily flood of information with which many people are unable to cope when they have to make critical decisions (and forecasts) in the face of uncertainty. Two approaches can help.

Information Value Analysis: Bayes Theory provides a method for separating high value signals from the flood of noise that accompanies them. Let’s say that you have determined that three outcomes (A, B, and C) are possible. The value of a new piece of information (or related pieces of evidence) can be determined based on the likelihood you would observe it if Outcomes A, B, or C happen. If the information is much more likely to be observed in the case of just one outcome, it has high value. If it is equally likely under all three outcomes, it has no value. More difficult, but just as informative, is applying this same logic to the absence of a piece of information. This analysis (and the outcome probability estimates) should be repeated at regular intervals to assess newly arriving evidence.

Assumptions Analysis: Probabilistic forecasts rest on a combination of (1) facts, (2) assumptions about critical uncertainties, (3) the evidence (of varying reliability and information value) supporting those assumptions, and (4) the logic used to reach the forecaster’s conclusion. In our forecasting work with clients over the years, we have found that discussing the assumptions made about critical uncertainties, and, less frequently the forecast logic itself, generates very productive discussions and improves predictive accuracy.

In particular, Marvin Cohen’s approach has proved quite practical. His research found that the greater the number of assumptions about “known unknowns” (i.e., recognized uncertainties) that underlie a forecast, and the weaker the evidence that supports them, the lower confidence one should have in the forecast’s accuracy.

Also, the more assumptions about “known unknowns” that are used in a forecast, the more likely it is that potentially critical “unknown unknowns” remain to be discovered, which again should lower your confidence in the forecast (e.g., see, “Metarecognition in Time-Stressed Decision Making: Recognizing, Critiquing, and Correcting” by Cohen, Freeman, and Wolf).

HOW TO REDUCE NOISE?

Combine and Extremize Forecasts: Research has found that three steps can improve forecast accuracy. The first is seeking forecasts based on different forecasting methodologies, or prepared by forecasters with significantly different backgrounds (as a proxy for different mental models and information). The second is combining those forecasts (using a simple average if few are included, or the median if many are). The final step, which significantly improved the performance of the Good Judgment Project team in the IARPA forecasting tournament, is to “extremize” the average (mean) or median forecast by moving it closer to 0% or 100%.

Averaging forecasts assumes that the differences between them are all due to noise. However, as the number of forecasts being combined increases, use of the median produces the greatest increase in accuracy because it does not “average away” all information differences between forecasters. However, that still leaves whatever bias is present in the median forecast.

Forecasts for binary events (e.g., the probability an event will or will not happen within a given time frame) are most useful to decision makers when they are closer to 0% or 100% rather than the uninformative “coin toss” estimate of a 50% probability. As described by Baron et al in “Two Reasons to Make Aggregated Probability Forecasts More Extreme”, individual forecasters will often shrink their probability estimates towards 50% to take into account their subjective belief about the extent of potentially useful information that they are missing.

For this reason, forecast accuracy can usually be increased when you employ a structured “extremizing” technique to move the mean or median probability estimate closer to 0% or 100%. Note that the extremizing factor should be lower when average forecaster expertise is higher. This is based on the assumption that a group of expert forecasters will incorporate more of the full amount of potentially useful information than will novice forecasters. (See: “Two Reasons to Make Aggregated Probability Forecasts More Extreme”, by Baron et al, and “Decomposing the Effects of Crowd-Wisdom Aggregators”, by Satopaa et al).

Use a Forecasting Algorithm: Use of an algorithm (whose structure can be inferred from top human forecasters’ performance) ensures that a forecast’s information inputs and their weighting are consistent over time. In some cases, this approach can be automated; in others, it involves having a group of forecasters (e.g., interviewers of new hire candidates) ask the same set of questions and use the same rating scale, which facilitates the consistent combination of their inputs. Kahneman has also found that testing these algorithmic conclusions against forecasters’ intuition, and then examining the underlying reasons for any disagreements between the results of the two methods can sometimes improve results.

However, it is also critical to note that this algorithmic approach implicitly assumes that the underlying process generating the results being forecast is stable over time. In the case of complex adaptive systems (which are constantly evolving), this is not true.

Unfortunately, many of the critical forecasting challenges we face involve results produced by complex adaptive systems. For the foreseeable future, expert human forecasters will still be needed to meet them. By decomposing the root causes of forecasting error, the BIN model will help them to do that.

Complexity, Wicked Problems, and AI-Augmented Decision Making

17/Mar/21 13:08

Over the years, some of the most thought provoking research we have read on the practical implications and applications of complex adaptive systems theory has come from people who have never received the recognition their thinking deserves. One is Dietrich Dorner and his team at Otto-Friedrich University in Bamberg, Germany (see his book, “The Logic of Failure”). Another is Anne-Marie Grisogono, who worked for years at Defense Science and Technology Australia and has recently left there for academia, at Flinders University in Adelaide, Australia.

Grisogono recently published “How Could Future AI Help Tackle Global Complex Problems?” It is a great synthesis of the challenges for decision makers posed by increasing complexity and how improving artificial intelligence technologies could one day help meet them.

She begins by noting that, “we can define intelligence as the ability to produce effective responses or courses of action that are solutions to complex problems—in other words, problems that are unlikely to be solved by random trial and error, and that therefore require the abilities to make finer and finer distinctions between more and more combinations of relevant factors and to process them so as to generate a good enough solution.”

Grisogono then links this definition of intelligence to the emergence and growth of complexity. “Obviously [finding good enough solutions] becomes more difficult as the number of possible choices increases, and as the number of relevant factors and the consequence pathways multiply. Thus complexity in the ecosystem environment generates selection pressure for effective adaptive responses to the [increasing] complexity.”

“One possible adaptive strategy is to find niches to specialize for, within which the complexity is reduced. The opposite strategy is to improve the ability to cope with the complexity by evolving increased intelligence at an individual level, or collective intelligence through various types of cooperative or mutualistic relationships. Either way, increased intelligence in one species will generally increase the complexity of the problems they pose for both other species in the shared ecosystem environment, and for their own conspecifics, driving yet further rounds of adaptations. Even when cooperative interactions evolve to deal with problems that are more complex than an individual can cope with, the shared benefits come with a further complexity cost”…

That said, “it is evident that human intelligence and ingenuity have led to immense progress in producing solutions for many of the pressing problems of past generations, such as higher living standards, longer life expectancy, better education and working conditions. But it is equally evident that the transformations they have wrought in human society and in the planetary environment include many harmful unintended consequences, and that the benefits themselves are not equitably distributed and have often masked unexpected downsides…

“This ratcheting dynamic of increasing intelligence and increasing complexity continues as long as two conditions are met: further increases in sensing and processing are sufficiently accessible to the evolutionary process, and the selection pressure is sufficient to drive it. Either condition can fail. Thus generally a plateau of dynamic equilibrium is reached. But it is also possible that under the right conditions, which we will return to below, the ratcheting of both complexity and intelligence may continue and accelerate.”

Grisogono then moves on to a fascinating and admirably succinct discussion of “what we have learned about the specific limitations that plague human decision-makers in complex problems. We can break this down into two parts: the aspects of complex problems that we find so difficult, and what it is about our brains that limits our ability to cope with those aspects.”